Maximizing computation, minimizing data

I had a Digipede customer ask me last week about how to optimize his processes for running on the Digipede Network. He is grid-enabling an existing application--he's got a class that does a lot of calculation, and he wants to have a bunch of those objects calculating in parallel. Each object is already independent.

Sounds perfect for distributed execution, right? "Twenty lines of code" away from being Digipede-enabled?

Well, not quite.

See, the objects that he does his calculation on are pretty darn big--on the order of 20-25 MB each. He actually had no idea they were so big, but the class has lots of members, and many of the members are large classes themselves. Now, the Digipede Network can certainly move objects of that size--but those objects have to be moved in order to calculate on them, and moving data often takes more time than the computation you need to do on it. (See Jim Gray's Distributed Computing Economics to get an idea of what that means, but bear in mind that we are only discussing LAN-wide computation here.)
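To put rough numbers on that, here is a back-of-the-envelope sketch in Python. The link speeds are my assumptions, not measurements from the customer's network, and the estimate ignores latency, serialization time, and protocol overhead, so real transfers will be slower than this lower bound.

```python
def transfer_seconds(payload_bytes: int, link_bits_per_second: float) -> float:
    """Lower bound on the time to push a payload over a link (no overhead)."""
    return payload_bytes * 8 / link_bits_per_second


# Assumed link speeds, for illustration only.
FAST_ETHERNET = 100_000_000       # 100 Mbit/s
GIGABIT_ETHERNET = 1_000_000_000  # 1 Gbit/s

BIG_OBJECT = 20_000_000  # roughly 20 MB, the low end of the customer's objects
SMALL_OBJECT = 4_000     # "a few kilobytes" (assumed figure)

for name, speed in [("100 Mbit/s", FAST_ETHERNET), ("1 Gbit/s", GIGABIT_ETHERNET)]:
    big = transfer_seconds(BIG_OBJECT, speed)
    small = transfer_seconds(SMALL_OBJECT, speed)
    print(f"{name}: big object {big:.4f} s, small object {small:.6f} s")
```

Under these assumptions, each 20 MB object costs at least 1.6 seconds on a 100 Mbit/s link before any calculation starts. If the calculation itself takes less than that, shipping the object costs more than computing on it.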

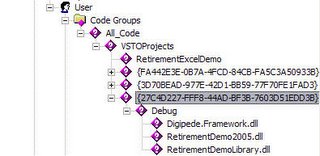

The answer is frequently to create a class that contains only the data relevant to the distributed calculation. In this customer's case, he was "retrofitting" the application to work on the Digipede Network. His class wasn't designed for distribution and, as a result, held a lot of data that wasn't necessary for the calculation he wanted to run remotely. In other words, his objects did a lot in their lifetime, only a portion of which was going to be distributed.

The customer needed to create a class that contained only the data relevant to his distributed process, and use that as a member of his huge class. Only this new, smaller class gets distributed across the network. Instead of moving 20 MB per object, he now moves only a few kilobytes. When the small class returns from its journey across the network, its result data is copied back into the main object.
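The shape of that refactoring can be sketched in a few lines. This is a language-neutral illustration in Python, not the customer's code and not the Digipede API: `CalcTask`, `BigModel`, `run_on_worker`, and `distribute` are hypothetical names, the compound-growth calculation is a placeholder, and the "network" is simulated by serializing to JSON bytes and back within one process.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class CalcTask:
    """Hypothetical small class: only the inputs and output of the remote work."""
    rate: float
    periods: int
    result: Optional[float] = None

    def compute(self) -> None:
        # Placeholder calculation standing in for the real, expensive one.
        self.result = (1.0 + self.rate) ** self.periods


@dataclass
class BigModel:
    """Hypothetical large class: holds the small task as a member."""
    task: CalcTask
    # Stand-in for the ~20 MB of state the remote calculation never touches.
    bulk_data: bytes = field(default_factory=lambda: bytes(20_000_000), repr=False)


def run_on_worker(payload: bytes) -> bytes:
    """Simulated remote side: rebuild the task, compute, send it back."""
    task = CalcTask(**json.loads(payload))
    task.compute()
    return json.dumps(asdict(task)).encode("utf-8")


def distribute(model: BigModel) -> int:
    """Ship only model.task, copy the result back, return bytes sent."""
    outbound = json.dumps(asdict(model.task)).encode("utf-8")
    inbound = run_on_worker(outbound)
    model.task = CalcTask(**json.loads(inbound))  # result lands in the big object
    return len(outbound)


model = BigModel(task=CalcTask(rate=0.05, periods=2))
bytes_sent = distribute(model)
print(f"sent {bytes_sent} bytes instead of {len(model.bulk_data)}")
print(f"result copied back: {model.task.result}")
```

The big object never leaves the originating machine. The only thing that has to be serializable, and the only thing whose size matters for network cost, is the small task class.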

Our customer needed to do a bit more work than the fabled "twenty lines of code"--but he ended up with a more structured application and vastly improved performance.